40 Technical Interview Rubrics by Seniority Level [Expert-Verified 2026]

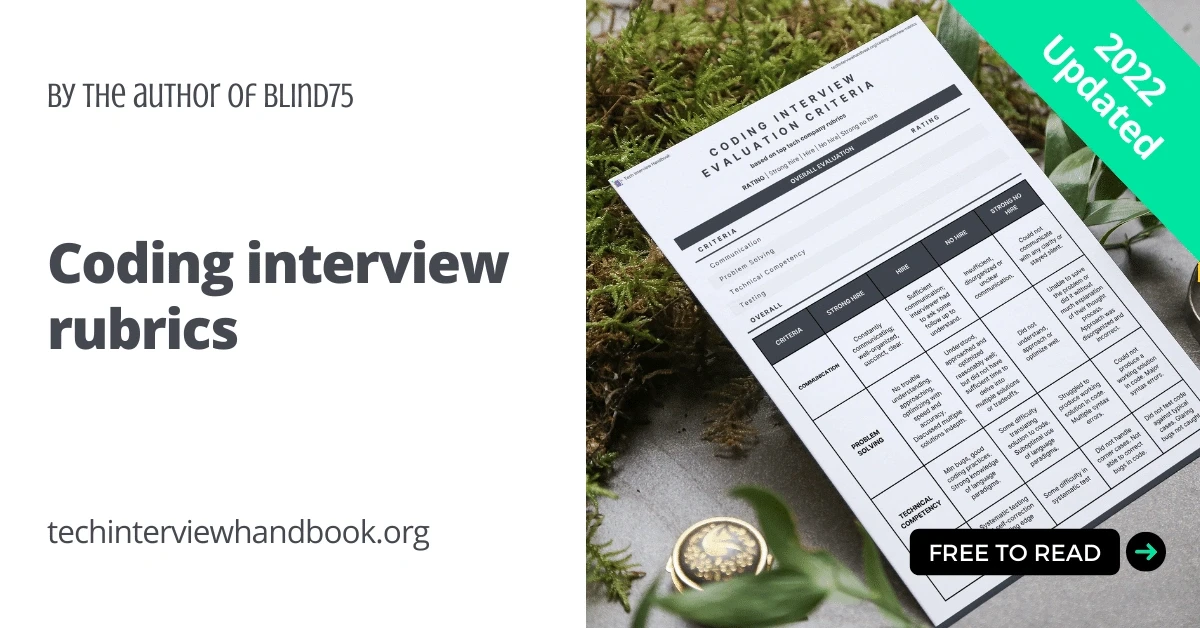

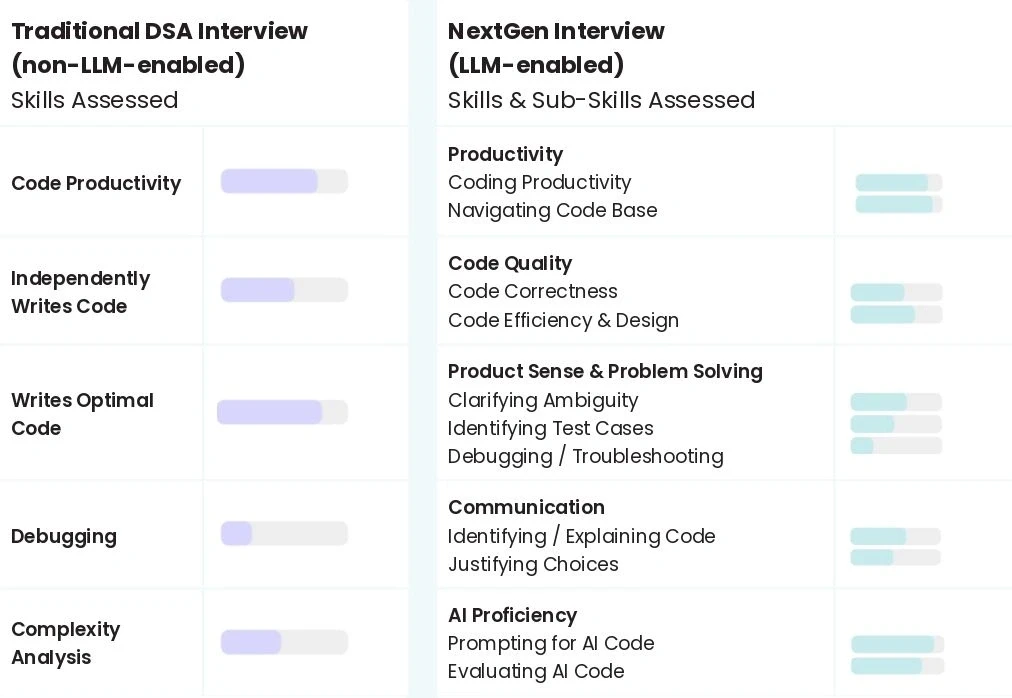

20 Min Read

Key Takeaways (TL;DR)

Structured rubrics eliminate guesswork in technical hiring. Clear evaluation criteria create consistent assessments across all interviewers and candidates.

Technical interviews evaluate 15+ dimensions beyond coding. Communication, problem-solving, autonomy, and cultural fit predict job performance as much as technical skills.

Expectations scale dramatically by seniority level. Junior engineers need basic problem-solving abilities while senior engineers must demonstrate flawless execution and leadership capabilities.

Communication and collaboration skills separate exceptional candidates from competent ones. Effective communicators increase team productivity by 25%.

Observable behaviors matter more than subjective impressions. Rubrics focus on measurable signals like code quality, testing strategies, and feedback response patterns rather than gut feelings.

Structured interviews predict job performance three times better than unstructured ones. This stark reality explains why interview rubrics have become essential tools for technical hiring teams seeking consistent, fair, and effective evaluation methods.

Technical interviews challenge even experienced hiring managers. Interviewers screen numerous candidates for single roles, making standardized evaluation criteria crucial for fair comparison. Using rubrics in hiring improves accuracy by 34% while reducing bias. Meanwhile, 65% of technical recruiters acknowledge bias in their current processes, highlighting the urgent need for structured evaluation frameworks.

Top tech companies use remarkably similar evaluation criteria. FAANG companies organize their coding interview rubrics into four primary dimensions: Communication, Problem Solving, Technical Competency, and Testing. This consistency across industry leaders suggests that well-designed rubric systems work in practice.

Structured rubrics deliver measurable benefits beyond bias reduction. Teams using standardized evaluation processes increase productivity by 25%. Organizations with established technical interview frameworks have conducted nearly 100,000 successful interviews for companies like Databricks, MongoDB, and Flatiron Health.

This article provides 40 expert-verified interview rubrics organized by seniority level. Each scoring template addresses specific competencies required across experience levels, from entry-level developers to senior architects. These structured evaluation tools create predictable, fair, and effective technical assessments for both interviewers and candidates.

Communication Rubric


Technical interviews assess communication skills almost as rigorously as coding ability. Effective communication increases team productivity by 25%, making this competency crucial for interview evaluation frameworks.

Communication expectations by seniority

Junior engineers must demonstrate basic clarification skills and explain their approach clearly. Mid-level engineers should articulate tradeoffs and maintain constant communication during implementation. Senior engineers need exceptional thought organization, precise communication, and minimal interviewer prompting.

Strong candidates across all levels share their problem-solving process transparently. They use specific technical vocabulary—discussing "instance variables" and "enumerating elements" rather than vague descriptions like "doing something with this thing here".

Observable behaviors in interviews

Effective communicators demonstrate these measurable behaviors:

Ask appropriate clarifying questions before coding

Outline high-level approach and structure

Explain rationale and tradeoffs for decisions

Maintain continuous narration during implementation

Use precise programming terminology

Communication scoring typically ranges from "strong no hire" (remained silent or communicated unclearly) to "strong hire" (thorough, well-organized, succinct explanation of thought process).

Common pitfalls in communication

Technical competency alone cannot overcome communication failures. Critical mistakes include:

Poor preparation: Skipping introductions or speaking too softly—interviewers form impressions within the first minute.

Disorganized explanations: Jumping between ideas or avoiding structured frameworks like STAR (Situation-Task-Action-Result).

Context misalignment: Failing to adapt between high-context communication (reading between lines) and low-context communication (explicit, direct statements).

False confidence: Attempting to bluff rather than admitting knowledge gaps—interviewers value candidates who honestly say "I don't know".

Candidates should prepare concise self-introductions, establish energy and clarity immediately, and follow structured approaches to technical explanations.

Problem Solving Rubric


Problem-solving ability drives technical interview success more than any other single factor. Effective rubrics evaluate how candidates approach complex challenges, not just whether they find correct answers.

Problem-solving depth by level

Junior engineers should develop working solutions with adequate organization. They often struggle with optimization but can demonstrate basic problem-solving logic. Mid-level engineers must find well-thought-out solutions with time to discuss alternatives. Senior engineers demonstrate exceptional problem decomposition skills—solving problems systematically while evaluating multiple approaches and trade-offs.

Strong candidates across all levels ask clarifying questions before coding, approach problems systematically, and determine time/space complexity accurately without major hints.

Trade-off analysis and optimization

Optimization problems reveal a candidate's ability to balance competing factors:

Time complexity: Algorithm execution speed

Space complexity: Memory usage requirements

Code readability: Clarity and maintainability

Strong candidates identify the Best Theoretical Time Complexity (BTTC) for solutions and recognize when they cannot improve it. They articulate trade-offs clearly, explaining why they chose specific data structures or algorithms over alternatives. For example, explaining why a hash table provides O(1) lookup time at the cost of additional memory demonstrates solid optimization awareness.
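The hash table trade-off mentioned above can be made concrete with a small sketch. The problem (checking whether any two numbers sum to a target) and both function names are illustrative assumptions, not taken from any particular company's question bank.

```python
def has_pair_with_sum_quadratic(nums, target):
    """Check every pair: O(n^2) time, O(1) extra space."""
    for i in range(len(nums)):
        for j in range(i + 1, len(nums)):
            if nums[i] + nums[j] == target:
                return True
    return False


def has_pair_with_sum_hashed(nums, target):
    """Single pass with a set: O(n) average time, O(n) extra space."""
    seen = set()  # values visited so far; average O(1) membership checks
    for value in nums:
        if target - value in seen:
            return True
        seen.add(value)
    return False
```

A candidate who can explain why the second version trades memory for speed, and when the first version might still be acceptable (tiny inputs, tight memory limits), is showing the kind of trade-off reasoning this rubric looks for.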

Scoring examples

Most companies use a four- or five-point scale for problem-solving assessment. A typical four-point version looks like this:

Strong No Hire: Unable to solve the problem or approached randomly without explanation

Leaning No Hire: Showed basic problem-solving signals only

Leaning Hire: Met all basic expectations but lacked time for advanced considerations

Strong Hire: Demonstrated exceptional problem-solving with time for trade-offs, alternatives, and extensions

Effective scoring rubrics evaluate observable behaviors—not just problem resolution, but approach quality, alternative consideration, and reasoning communication throughout the process.

Technical Competency Rubric


Technical competency assessment evaluates coding ability beyond problem-solving. This dimension focuses on how candidates implement solutions, reflecting their real-world programming capabilities.

Code fluency by experience

Junior engineers should demonstrate basic syntax knowledge with few errors, though they might struggle with language-specific features. Mid-level engineers must show comfort with language constructs and produce clean code with minimal syntax issues. Senior engineers are expected to write flawless code, demonstrating exemplary knowledge of language paradigms and effortlessly comparing multiple coding approaches.

Technical fluency follows a 4-point scoring scale:

Strong Hire (4): Working, clean code with no syntax errors and outstanding understanding of language paradigms

Leaning Hire (3): Working code with few syntax errors and minor difficulties using language features

Leaning No Hire (2): Code with some syntax errors, struggling with naive solutions

Strong No Hire (1): Non-working solution with major syntax/logic errors
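Teams that record scores in a tool rather than on paper could encode this scale directly. The following is a minimal sketch: the `Rating` and `summarize` names are hypothetical, and reporting the mean and spread across interviewers is an assumption made for illustration, not a method described in the rubric itself.

```python
from enum import IntEnum
from statistics import mean


class Rating(IntEnum):
    """The 4-point technical fluency scale listed above."""
    STRONG_NO_HIRE = 1
    LEANING_NO_HIRE = 2
    LEANING_HIRE = 3
    STRONG_HIRE = 4


def summarize(scores):
    """Return the mean rating and the spread between interviewers.

    Assumption: a large spread is treated as a prompt for a debrief
    discussion rather than being averaged away.
    """
    values = [int(score) for score in scores]
    return {"mean": mean(values), "spread": max(values) - min(values)}
```

For example, `summarize([Rating.STRONG_HIRE, Rating.LEANING_HIRE, Rating.LEANING_HIRE])` reports a spread of 1, which suggests the interviewers largely agree.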

Use of language features

Strong candidates demonstrate command over language-specific constructs. They utilize appropriate abstractions, apply standard libraries effectively, and implement code that follows the idiomatic patterns of their chosen language.

Implementation quality

Implementation quality signals include:

Clean, straightforward code without unnecessary repetition

Proper variable naming and consistent formatting

Adherence to DRY (Don't Repeat Yourself) principles

Interviewers assess whether candidates can translate discussed solutions into working code with minimal bugs. Advanced candidates frequently compare several coding approaches and demonstrate strong knowledge of both their primary language and broader programming paradigms.
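As a rough illustration of the DRY signal, the sketch below contrasts two near-identical parsers with a single shared helper. All function names and the 0 to 1000 range are hypothetical and chosen only for this example.

```python
# Repetitive version: the same range check is written out twice.
def parse_width_repetitive(raw):
    value = int(raw)
    if value < 0 or value > 1000:
        raise ValueError(f"width out of range: {value}")
    return value


def parse_height_repetitive(raw):
    value = int(raw)
    if value < 0 or value > 1000:
        raise ValueError(f"height out of range: {value}")
    return value


# DRY version: one helper owns the parsing and validation rule.
def parse_bounded_int(raw, name, low=0, high=1000):
    value = int(raw)
    if not low <= value <= high:
        raise ValueError(f"{name} out of range: {value}")
    return value


def parse_dimensions(raw_width, raw_height):
    """Parse both dimensions through the shared helper."""
    return (
        parse_bounded_int(raw_width, "width"),
        parse_bounded_int(raw_height, "height"),
    )
```

In an interview, spotting the duplication and extracting the helper without being asked is the observable behavior worth noting.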

These rubrics enable consistent evaluation across multiple interviewers, ensuring every candidate receives fair assessment based on observable behaviors rather than personal bias.

Testing and Debugging Rubric


Debugging interviews compress weeks of real engineering behavior into 45-60 minutes. These sessions reveal how candidates perform under pressure when things go wrong.

Testing strategies by level

Junior engineers should demonstrate basic test case validation and bug identification skills. Mid-level engineers must exhibit methodical debugging approaches with structured test suites. Senior engineers are expected to implement testing strategies that anticipate edge cases before they arise.

Strong candidates exhibit logical search strategies that narrow the problem space efficiently. They systematically verify code correctness by stepping through each line, essentially "acting like a debugger".

Corner case handling

Corner case identification serves as a key differentiator in technical interviews. Interviewers score candidates from "strong no hire" (did not test corner cases) to "strong hire" (identified all relevant edge conditions without prompting).

Effective candidates think beyond happy path scenarios. They consider empty inputs, boundary conditions, and unexpected data types. This systematic approach demonstrates engineering maturity that coding challenges alone cannot reveal.
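The sketch below shows what thinking beyond the happy path might look like for a simple binary search. The function and the specific checks are illustrative assumptions; the point is the categories of input a candidate chooses to test.

```python
def binary_search(sorted_values, target):
    """Return the index of target in sorted_values, or -1 if absent."""
    lo, hi = 0, len(sorted_values) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if sorted_values[mid] == target:
            return mid
        if sorted_values[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return -1


def run_edge_case_checks():
    """Checks a candidate might walk through without being prompted."""
    assert binary_search([], 3) == -1            # empty input
    assert binary_search([3], 3) == 0            # single element, present
    assert binary_search([3], 4) == -1           # single element, absent
    assert binary_search([1, 3, 5, 7], 1) == 0   # first position (boundary)
    assert binary_search([1, 3, 5, 7], 7) == 3   # last position (boundary)
    assert binary_search([1, 3, 5, 7], 4) == -1  # missing value in range
    assert binary_search([1, 3, 5, 7], 0) == -1  # below the smallest value
    assert binary_search([1, 3, 5, 7], 9) == -1  # above the largest value
    return True
```

A candidate who lists these cases aloud before running anything is giving the interviewer a clear, scoreable signal.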

Self-correction signals

Interviewers pay attention to how candidates behave when progress slows. Strong candidates pause and reframe the problem, summarize what they know, and identify the highest remaining uncertainty.

Recovery behavior often receives heavier scoring weight than uninterrupted progress. High-signal recovery includes stating the most likely root cause with confidence level, supporting evidence, and next steps if additional time were available.

The ability to debug systematically under observation predicts real-world problem-solving capability better than perfect initial implementations.

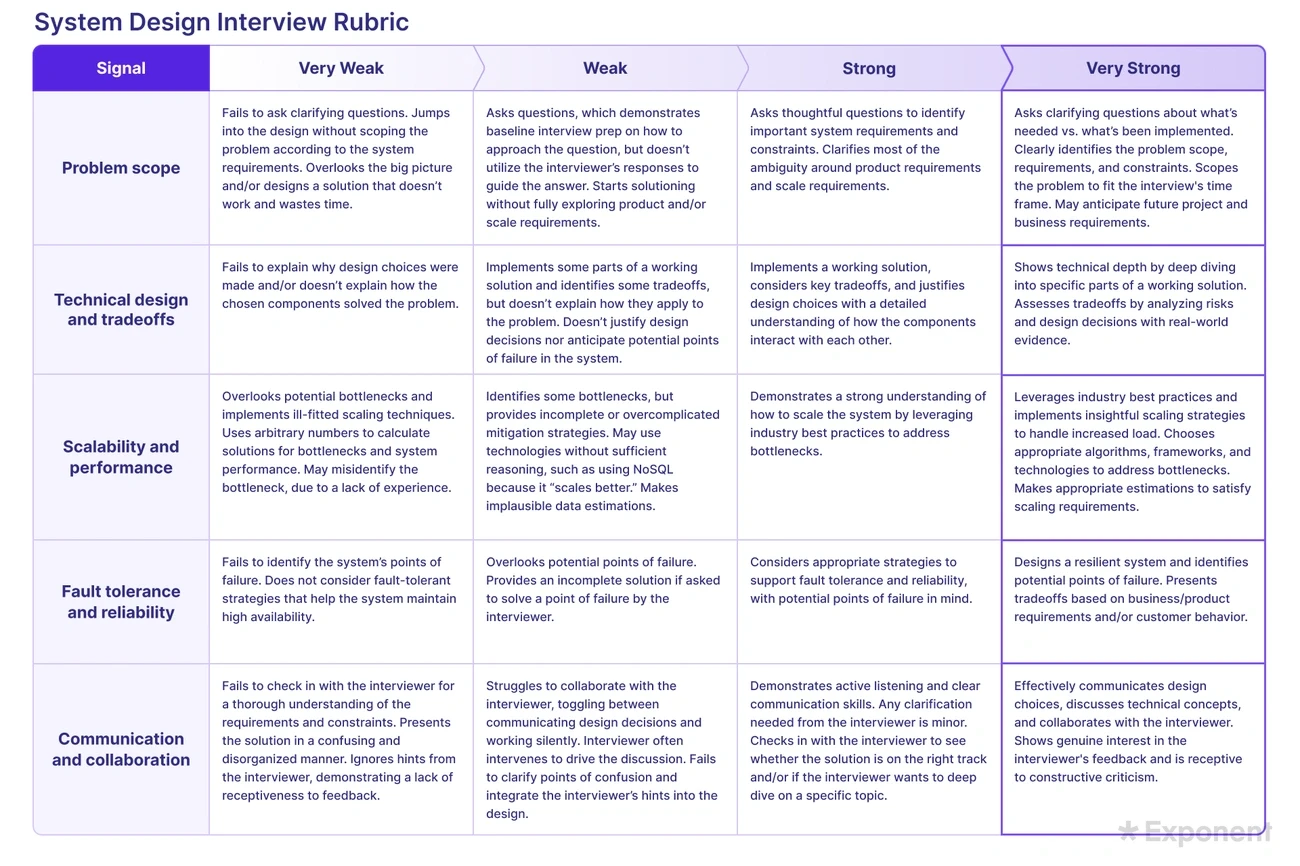

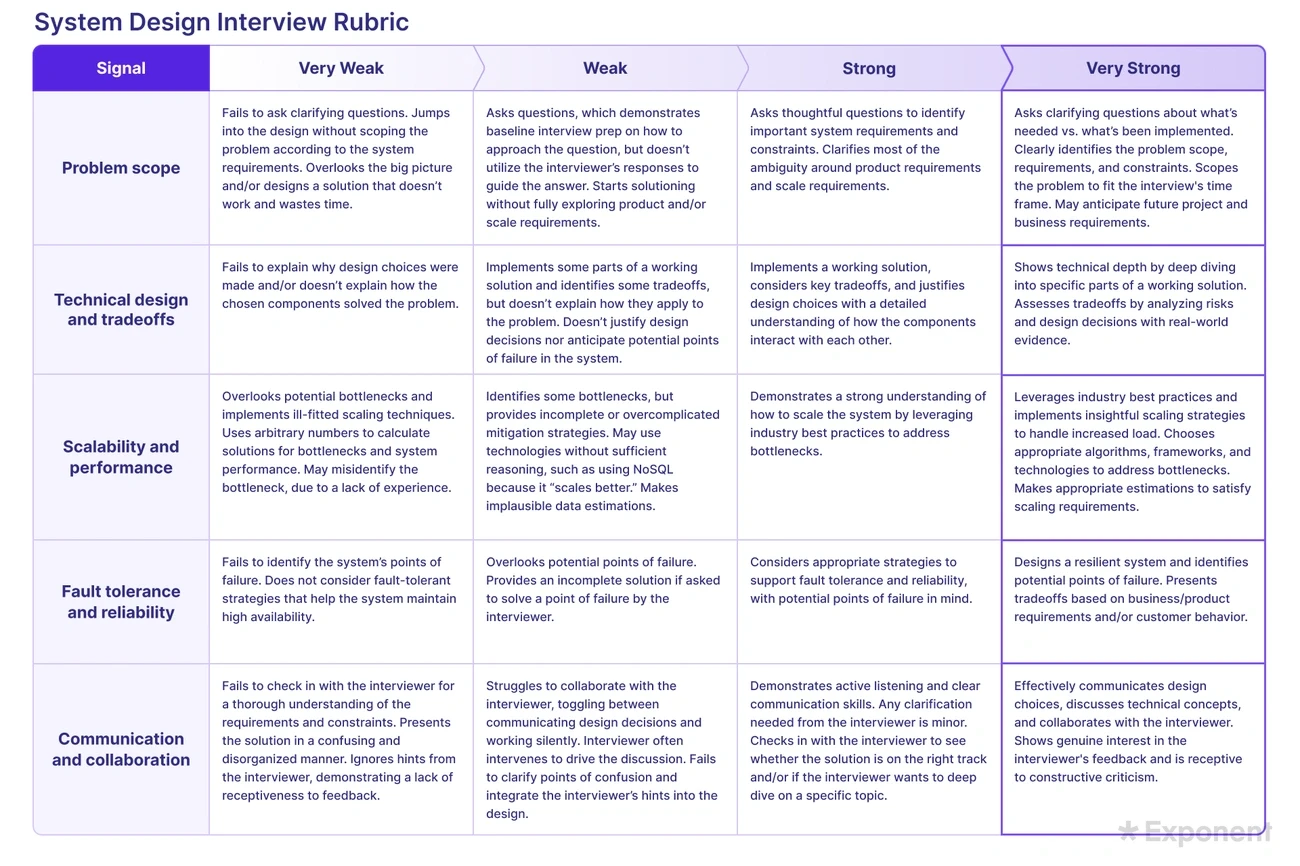

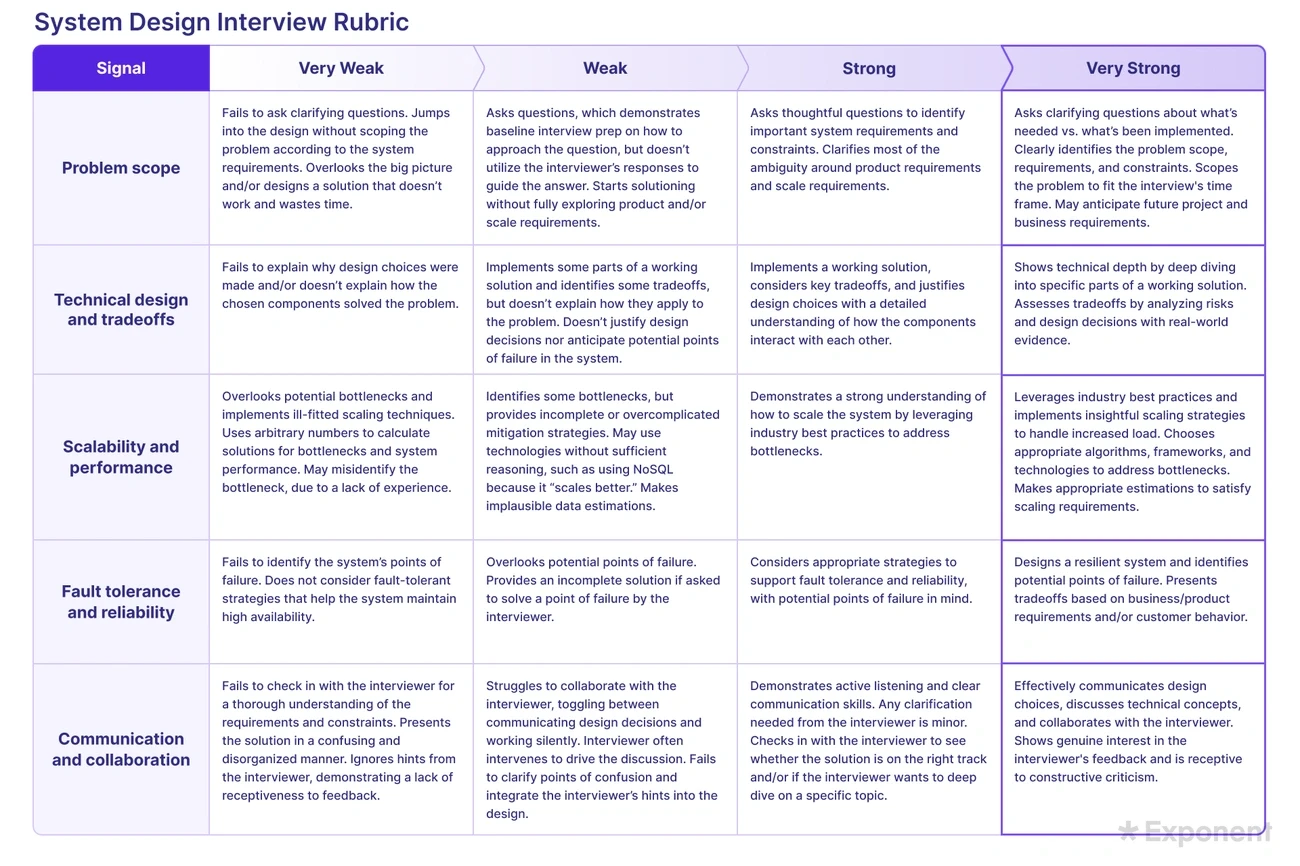

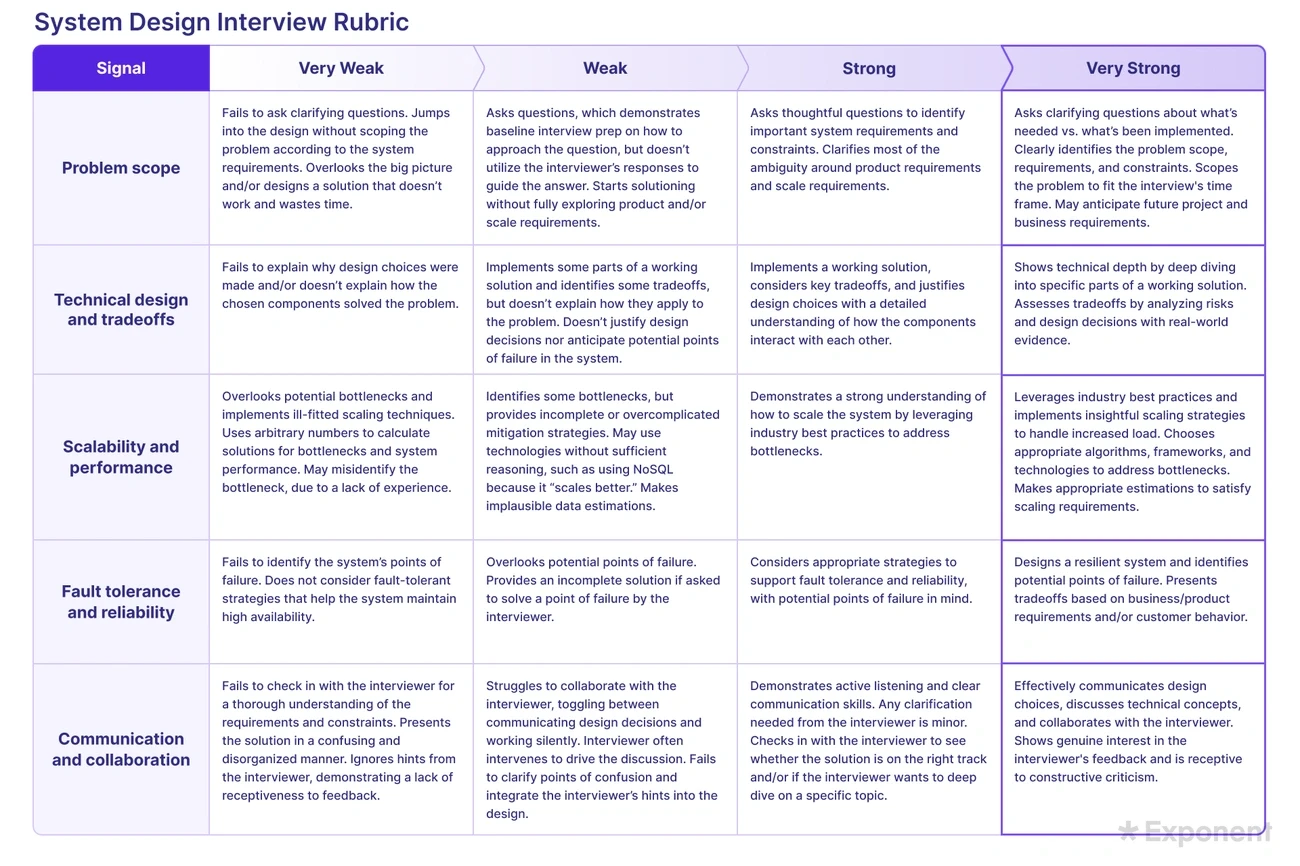

System Design Rubric


System design interviews assess entirely different dimensions than coding exercises. They evaluate a candidate's ability to architect scalable, resilient systems. These assessments reveal engineering maturity that coding challenges alone cannot surface.

Design expectations by seniority

Expectations shift markedly across three dimensions as candidates progress through career levels: breadth, depth, and proactiveness. Mid-level engineers need breadth of knowledge, shown as general familiarity with a range of system design principles. Senior roles require depth, shown as expertise in specific technical areas. Staff-level engineers must exhibit profound depth in multiple domains, offering novel insights rather than textbook answers.

Proactiveness increases with seniority. Mid-level candidates respond to questions. Staff-level engineers actively shape the dialog by identifying challenges before being prompted.

Abstraction and modularity

Strong candidates demonstrate clear separation of concerns through well-structured components with clean interfaces. Junior engineers typically produce functional but bolted-together designs. Senior engineers create elegantly structured components with thorough interaction models.

Scalability considerations

Effective scalability discussions include both vertical scaling (upgrading existing machines) and horizontal scaling (adding more servers) approaches. Senior candidates typically propose strategies like load balancing, caching, and database sharding to handle growth.
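To ground the sharding idea, here is a minimal sketch of hash-based shard routing. The `shard_for` function and the key format are hypothetical, and simple modulo routing is only one of several schemes a candidate might propose.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a key to a shard index using a stable hash.

    A cryptographic digest is used here only because it is stable across
    processes and runs, unlike Python's built-in hash() for strings.
    """
    if shard_count <= 0:
        raise ValueError("shard_count must be positive")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count
```

A strong candidate would likely point out the weakness of this sketch: changing `shard_count` remaps most keys, which is why approaches such as consistent hashing often come up as a follow-up.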

System design interviews evaluate judgment more than diagrams. Interviewers stop asking "Does this work?" and start asking "Do I trust this person's judgment?"

Autonomy Rubric


Autonomy separates exceptional engineering candidates from average ones in technical interviews. Effective interview rubrics must evaluate how candidates work independently and own problems throughout the assessment process.

Initiative and ownership

Initiative reflects a candidate's ability to identify and act on opportunities without prompting. Self-starters exhibit proactive problem identification, willingness to take calculated risks, and persistence when facing obstacles. Junior candidates should demonstrate basic problem ownership. Senior candidates must show they can identify opportunities others might miss.

Decision-making independence

Interviewers score autonomy on a five-point scale:

Strong No: Requires hand-holding through every step, refusing to make decisions without interviewer consent

No: Needs significant support, seeks approval for each decision

Mixed: Works independently but regularly seeks validation in ways that abdicate responsibility

Yes: Confidently owns decisions, demonstrates strong independent work capability

Strong Yes: Controls interview cadence, seeking guidance only when appropriate while explaining rationale

Examples of autonomy

Junior candidates should use interviewers as resources while maintaining problem ownership. Mid-level engineers should demonstrate the ability to make decisions independently within scope. Senior candidates control interview pacing, present solutions with minimal prompting, and explain their decision-making process without being asked.

Autonomy manifests through candidates who break problems down independently, request clarification only when necessary, and make decisions confidently without seeking constant validation.

Code Fluency Rubric


Code fluency represents a critical evaluation dimension in technical interviews. Interviewers assess not just what candidates build, but how elegantly they construct it.

Syntax and idiomatic usage

Code fluency varies dramatically across seniority levels. Junior candidates should demonstrate basic language constructs like loops and conditionals with few syntax errors. Senior engineers must code "without any significant pauses" and write "idiomatic code by default". Strong candidates translate thought into code effortlessly, choosing sensible variable names throughout.

Standard library knowledge

Effective engineers demonstrate familiarity with their language's standard libraries instead of reimplementing existing functionality. Candidates earning "Strong Yes" ratings typically "use standard library functions and describe their behavior when asked". Interview rubrics should explicitly evaluate whether candidates use built-in functions appropriately or reinvent solutions unnecessarily.
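A short Python sketch of the difference, using word counting as an assumed example task. Both function names are hypothetical; `collections.Counter` and its `most_common` method are part of the standard library.

```python
from collections import Counter


def most_common_words_manual(words, k):
    """Hand-rolled counting and ranking: works, but reinvents Counter."""
    counts = {}
    for word in words:
        counts[word] = counts.get(word, 0) + 1
    ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    return ranked[:k]


def most_common_words(words, k):
    """Same result using the standard library."""
    return Counter(words).most_common(k)
```

Neither version is wrong. The rubric signal is whether the candidate knows the built-in exists and can describe what it does when asked.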

Code readability

Readability differentiates average from exceptional candidates. Clearly organized code with descriptive variable names signals strong implementation quality. As one interview rubric template notes, "If the candidate gets confused about their own code, then the interviewer can conclude that the code is confusing". The best software engineer interview prep emphasizes not just correctness but clarity—ensuring code remains maintainable long after the interview concludes.

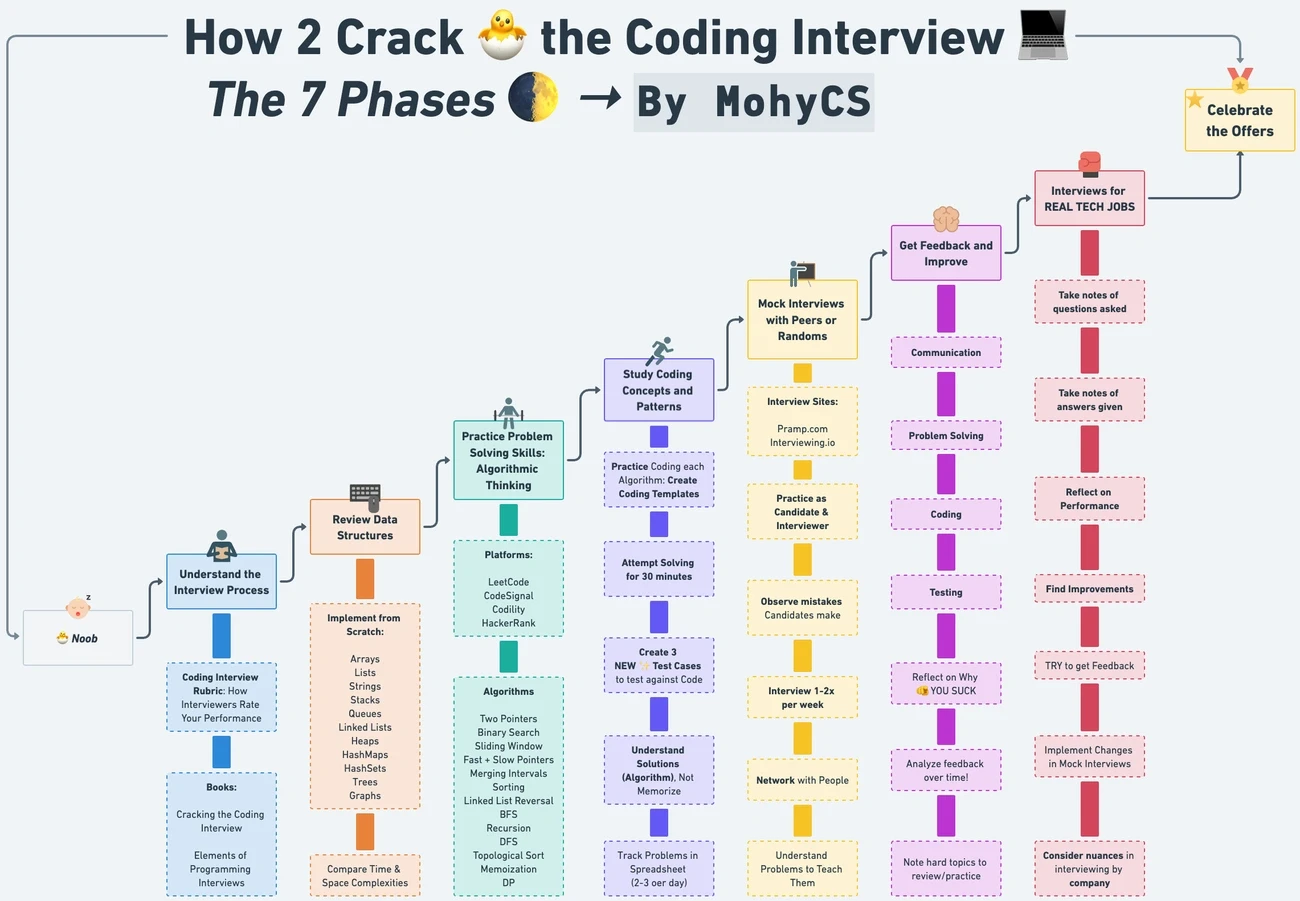

Basic CS Knowledge Rubric


Computer science fundamentals serve as the foundation for technical interview evaluation. Interviewers assess candidates' grasp of core principles that transcend specific languages or frameworks.

Data structures and algorithms

Expectations scale dramatically with seniority. Junior candidates should demonstrate familiarity with common data structures like arrays, hashes, and sets. Mid-level engineers must select appropriate data structures based on problem context. Senior engineers exhibit knowledge of advanced structures—heaps, priority queues, and tries—showing comfort with complex implementations.

The key difference lies not in memorization but in application. Strong candidates explain why they chose a particular data structure over alternatives, demonstrating practical engineering judgment.
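As one example of choosing a structure for a reason, the sketch below finds the k largest values with a bounded min-heap instead of sorting everything. The function name is hypothetical; `heapq` is Python's standard heap module.

```python
import heapq


def k_largest(values, k):
    """Return the k largest values in descending order.

    Keeps a min-heap of at most k items, so the cost is O(n log k) time
    and O(k) extra space, versus O(n log n) for sorting the whole input.
    """
    if k <= 0:
        return []
    heap = []
    for value in values:
        if len(heap) < k:
            heapq.heappush(heap, value)
        elif value > heap[0]:
            # The smallest kept value is beaten, so swap it out.
            heapq.heapreplace(heap, value)
    return sorted(heap, reverse=True)
```

A candidate who can say when this beats a plain sort (large n, small k, streaming input) and when it does not is applying the structure rather than reciting it.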

Time/space complexity

Complexity analysis reveals algorithmic thinking skills. Evaluation follows a clear progression:

Strong No Hire: Cannot assess algorithm efficiency or compare solutions

No Hire: Recognizes complexity concepts but cannot articulate differences

Mixed/Leaning Hire: Understands basic complexity analysis but struggles with detailed evaluation

Strong Hire: Derives precise complexity including dominating terms quickly

Trade-off awareness

Exceptional candidates articulate why they selected specific approaches. This demonstrates both technical depth and practical engineering judgment. The strongest candidates identify multiple solution approaches, compare their relative merits, and explain how their chosen algorithm balances runtime, memory usage, and code simplicity.

Understanding trade-offs matters more than memorizing textbook solutions. Candidates should prepare to discuss not just what works, but why it works best for the given constraints.

Collaboration Rubric


Collaborative skills reveal how candidates function within engineering teams. Technical interviews create opportunities to observe interpersonal dynamics that predict real-world team effectiveness.

Team interaction signals

Strong collaboration manifests through specific behaviors that interviewers monitor closely:

Verbalize thought process when facing difficulties

Adapt gracefully under pressure

Communicate complex concepts clearly

Respond constructively to feedback

These signals help hiring managers evaluate not just technical abilities but teamwork potential. Technical interviewers focus on the concrete contribution candidates will bring to the team beyond individual coding skills.

Use of interviewer as resource

The interviewer-candidate dynamic mirrors future workplace interactions. When candidates struggle with coding challenges, skilled interviewers provide guidance without revealing solutions. Strong candidates demonstrate appropriate resource utilization by asking clarifying questions that demonstrate understanding, verbalizing their thought process to reveal thinking patterns, and seeking targeted assistance rather than complete solutions.

This "flight simulator" experience allows interviewers to assess how candidates might collaborate with senior team members in actual work settings.

Credit sharing

How candidates discuss past work reveals their collaboration mindset. Evaluators should note whether candidates acknowledge team contributions versus claiming sole credit, use inclusive language like "we developed" versus exclusively individual framing, and recognize others' ideas when building upon them.

Such behavior indicates candidates will foster inclusive team environments where credit is shared appropriately and ideas can flourish collectively.

Curiosity Rubric


Curiosity indicators reveal long-term growth potential in candidates, often outweighing immediate technical skills. A strong interview rubric must assess this foundational trait that drives continuous improvement throughout an engineering career.

Self-directed learning

Interviewers score candidates from "Strong No" (demonstrates no interest in learning more) to "Strong Yes" (displays an insatiable appetite for learning with ample evidence of self-improvement). Curiosity need not be code-related—demonstrated interest in family genealogy, foreign cultures, or literary history can be equally revealing.

Follow-up questions

Follow-up questions serve as primary indicators of genuine curiosity. Junior candidates ask standard questions about tech stack or working hours without seeking deeper understanding. Senior candidates ask insightful questions about the company, pursue deeper context through additional questions, and demonstrate genuine interest in the answers.

Research and preparation

Research behaviors signal proactive curiosity. Top candidates research their interviewers beforehand and describe the satisfaction they get from solving mysteries, such as working out why something was broken. When preparing for an interview, candidates should draft questions that reveal their natural inquisitiveness.

Interviewers look for candidates who get excited when recognizing learning opportunities rather than those who accept statements at face value.

Awareness Rubric


Self-awareness serves as a reliable predictor of long-term development potential in technical interviews. Interview rubrics must evaluate how candidates recognize their limitations and respond to guidance.

Self-assessment ability

Candidates demonstrate self-assessment through realistic evaluation of their skills and limitations. Junior engineers recognize basic strengths and weaknesses. Senior candidates articulate nuanced understanding of their capabilities relative to industry standards. Strong candidates identify when they're stuck and verbalize their thought process without prompting.

Feedback response

Response to feedback provides crucial evaluation signals. Interviewers typically score candidates on a four-point scale:

Strong Hire: Incorporates feedback quickly and seeks deeper understanding

Leaning Hire: Applies feedback promptly but doesn't seek additional context

Leaning Don't Hire: Implements suggestions slowly with limited comprehension

Strong Don't Hire: Ignores hints or makes minimal use of guidance

Growth mindset

Growth mindset, the belief that abilities develop through dedication and continuous learning, underpins successful engineering careers. Effective candidates show these growth mindset signals:

View setbacks as learning opportunities

Analyze failures for improvement areas

Adjust approaches based on new information

Successful candidates evaluate progress against specific goals, seek diverse feedback perspectives, and create actionable development plans.

Empathy Rubric


Empathy distinguishes exceptional engineers from merely competent ones in technical interviews. Strong interview rubrics assess candidates' ability to understand multiple perspectives and anticipate others' needs.

Understanding others' perspectives

Empathy evaluation follows a clear progression. Candidates earning "Strong No" ratings use hostile language about former colleagues, describing people with differing opinions as "stupid". Those receiving "No" ratings dismiss alternative viewpoints without consideration, unable to explain contextual factors behind decisions they disagree with.

"Mixed" candidates show only a limited ability to understand others' perspectives and stop short of full sympathy. "Yes" candidates avoid blaming others, supply context when describing disagreements, and recognize the impact of their actions on colleagues. "Strong Yes" candidates thoroughly understand others' viewpoints before judging and give a balanced assessment of challenges.

Code maintainability

Empathy manifests through code quality. Junior candidates focus solely on functionality. Senior engineers demonstrate empathy by writing well-documented code with clearly named variables for the benefit of future maintainers. This practical empathy serves as a reliable signal even in purely technical interviews where interpersonal interactions remain limited.

Team sensitivity

Leadership roles require team sensitivity assessment. Strong candidates demonstrate concern when people outside their peer group face unfair treatment. Candidates should prepare examples showing how they've considered colleagues' perspectives and built inclusive environments. The most effective rubrics evaluate whether candidates can articulate others' worldviews and show consideration beyond their immediate technical contributions.

Values Alignment Rubric


Values alignment serves as a decisive factor that technical skills alone cannot predict. An effective interview rubric must assess whether candidates will uphold organizational principles when facing real workplace challenges.

Trust-building behaviors

Trust-building capacity directly impacts professional relationships and business outcomes. Interviewers evaluate trust on a progressive scale from candidates who show inconsistency between words and actions to those who maintain integrity despite personal costs. Strong candidates provide specific examples with concrete actions rather than vague claims about being "trustworthy." They can describe situations where they maintained trust despite significant challenges.

Integrity and honesty

Integrity concerns keeping one's word under pressure. Exceptional candidates articulate when they made promises they couldn't keep and how they handled those situations responsibly. Candidates should prepare examples showing how they've honored commitments or managed situations where they couldn't deliver as promised. Many hiring regrets stem from integrity issues rather than technical deficiencies.

Alignment with company mission

Every interview scoring rubric should evaluate candidates against specific organizational values. Companies assess candidates on motivation, proactiveness, empathy, communication style, and growth mindset. The strongest interview rubric templates align evaluation criteria with core organizational principles, recognizing that skills can be taught while values alignment cannot.

Resoluteness Rubric


Resoluteness—the ability to maintain performance under pressure—serves as a vital dimension in technical interview rubrics. Research confirms this: candidates solving problems under observation performed at nearly half their capability compared to when working privately.

Persistence under pressure

Evaluating persistence requires observing how candidates handle stress. Junior engineers should demonstrate basic resilience, staying focused despite mild pressure. Mid-level engineers must maintain problem-solving capability through significant challenges. Senior engineers should show unwavering focus regardless of pressure intensity.

Handling setbacks

Technical interviews intentionally present obstacles to assess recovery abilities. Studies show nervousness "taints" interviewers' perception of competence, even when candidates answer correctly. Various groups experience pressure differently—in one small study, all women participants who interviewed publicly failed, while all who worked privately passed.

Follow-through

Effective interview scoring rubrics measure candidates' commitment to completion. Strong candidates demonstrate systematic approaches even when facing roadblocks. Successful strategies include taking time to gather thoughts before answering, using pseudocode to organize thinking, and verbalizing thought processes to maintain focus.

Technical interviews often function more like stress tests than assessments of actual programming ability. Preparation should include practicing calmness techniques alongside technical skills.

Perspective Rubric


Perspective diversity separates exceptional candidates from competent ones in technical interviews. Teams with diverse backgrounds and viewpoints perform better and create more innovative solutions.

Unique viewpoints

Strong interview rubrics evaluate whether candidates offer fresh perspectives the organization lacks. Junior candidates demonstrate basic understanding of alternative viewpoints. Senior candidates actively identify gaps in current thinking. The strongest candidates teach interviewers something new or solve problems in ways the company hasn't encountered.

Diversity of thought

Life experiences shape how engineers approach problems. Gender, race, country of origin, languages spoken, military service, education, and socioeconomic background all influence perspective. Interviewers must avoid direct questions about protected categories. Instead, use open-ended prompts like "What experiences have shaped your worldview?"

Product improvement ideas

Product enhancement suggestions reveal perspective concretely. Top candidates arrive with thoughtful suggestions based on thorough research. They identify underserved communities whose needs the company hasn't addressed. Candidates should consider how their unique background might enhance existing products or open new markets.

Strong perspective signals include identifying overlooked user groups, proposing solutions from different cultural contexts, and questioning assumptions others take for granted. The best candidates don't just answer questions—they help interviewers see problems differently.

Time Management Rubric


Time management assessment reveals work habits that coding skills alone cannot surface. Interview rubrics must evaluate how engineers handle limited resources under pressure.

Urgency and prioritization

Junior candidates should demonstrate basic task prioritization. Senior engineers must exhibit sophisticated approaches to complex work management. When faced with multiple deadlines, strong candidates assess urgency and importance using prioritization matrices. Interviewers evaluate whether candidates break down tasks into manageable steps and track progress systematically.

Deadline adherence

Consistency in meeting deadlines serves as a core evaluation criterion. Interviewers ask candidates to describe specific instances of juggling multiple projects simultaneously. Top performers explain their planning methods, scheduling approaches, and tools used to monitor progress toward deadlines.

Efficiency in interviews

Interview settings function as time management assessments. Skilled candidates balance quality with speed when working under constraints. Strong candidates demonstrate structured thinking by:

Breaking problems into logical steps

Stating assumptions upfront

Weighing trade-offs before implementation

Effective communication remains crucial for deadline management—ensuring everyone stays aligned toward meeting shared timelines.

Leadership Rubric


Leadership capability separates strong senior candidates from exceptional ones in technical interviews. Staff-level engineers must demonstrate their ability to amplify team impact beyond individual contributions, even without formal management titles.

Mentorship and guidance

Strong candidates exhibit systematic approaches to knowledge transfer rather than isolated mentoring instances. Staff-level engineers create organization-wide learning systems that scale beyond direct relationships. Interviewers look for evidence of programs that helped others grow professionally—structured design reviews, documented lessons from incidents, or rotation programs pairing senior engineers with newcomers across teams.

Vision and influence

Leadership evaluation focuses on influence without authority—how candidates navigate disagreements between peers with competing priorities. Exceptional candidates demonstrate specific abilities:

• Create psychological safety for productive disagreement

• Surface technical criteria everyone agrees on

• Transform debates into joint fact-finding missions

• Convert ego-driven conflicts into shared ownership

Delegation and empowerment

Effective delegation requires setting clear expectations while providing appropriate autonomy. Top candidates articulate how they establish concrete outcomes, define guardrails, and assign decision rights when delegating work. They demonstrate matching responsibilities to team members' development goals—viewing delegation as an opportunity for growth rather than task distribution.

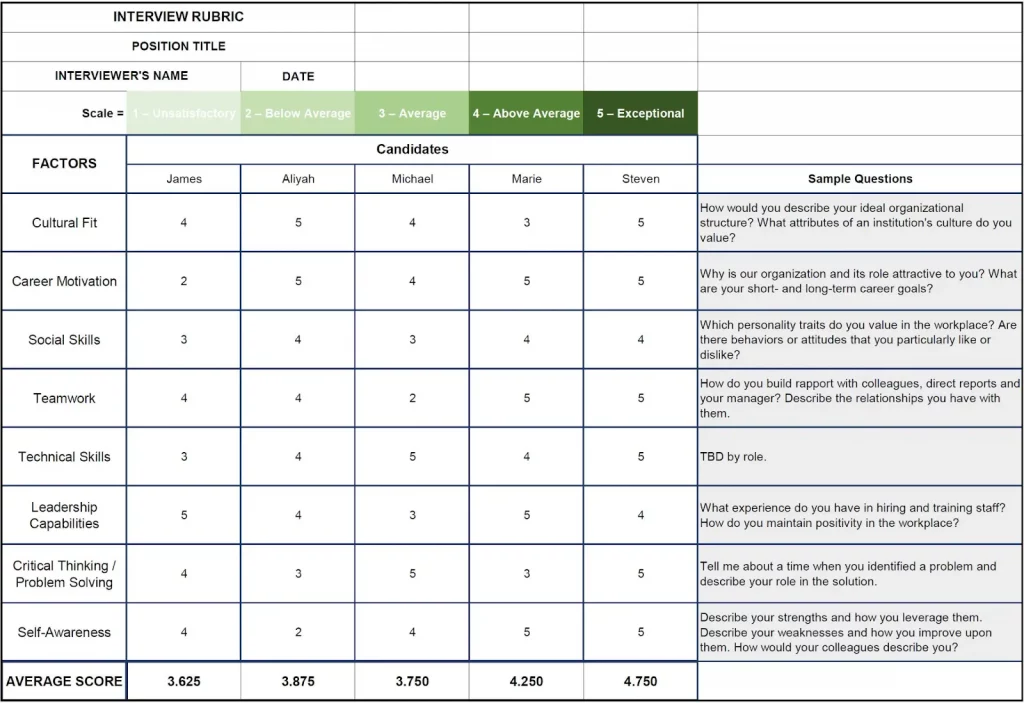

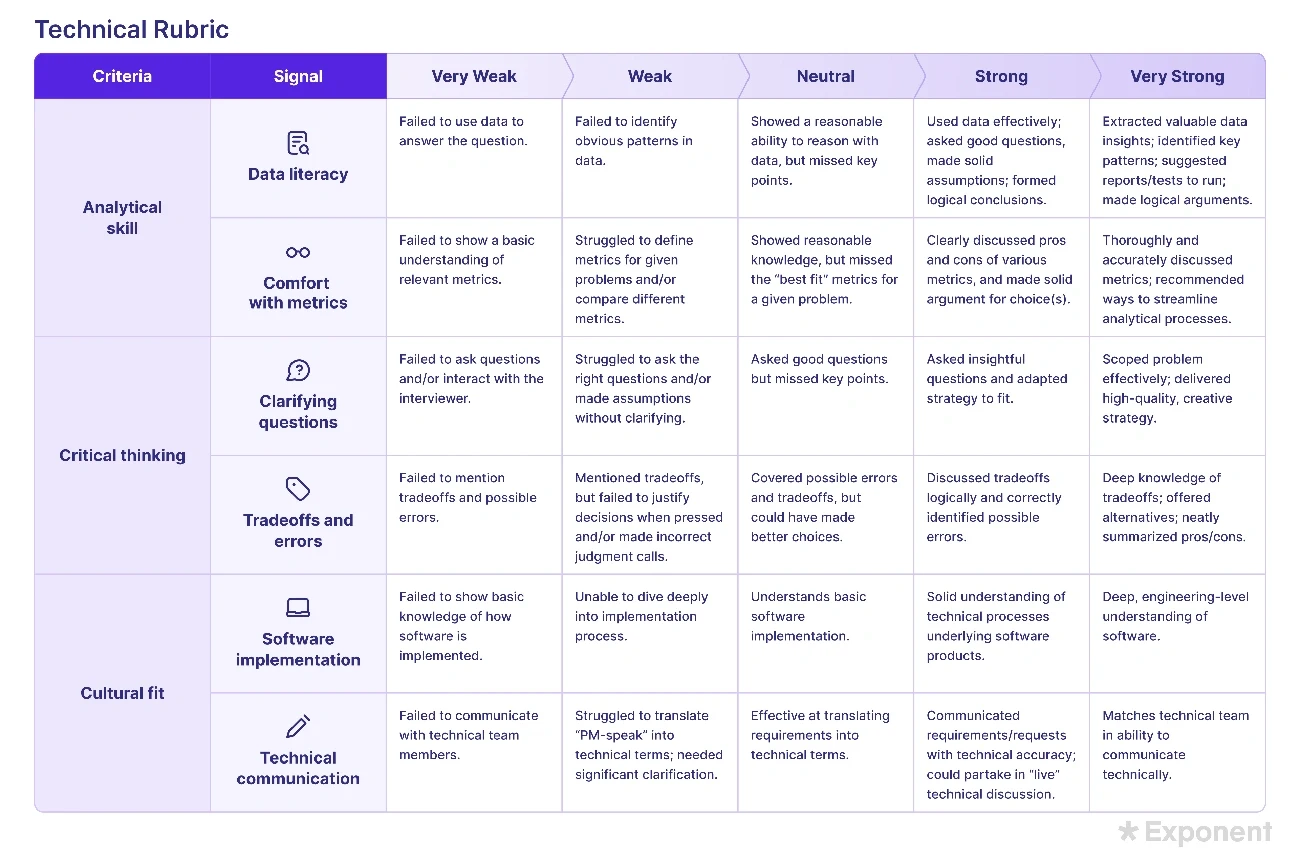

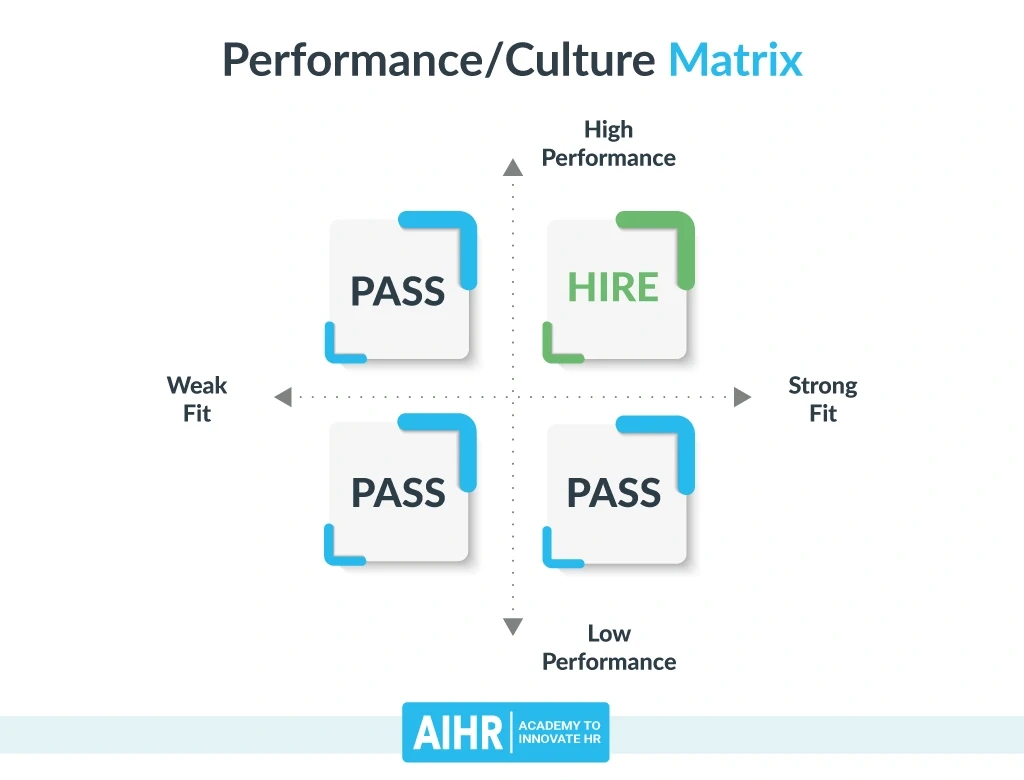

Cultural Fit Rubric

Image Source: AIHR

Cultural alignment provides crucial insights beyond technical abilities in interview evaluation. Cultural fit assessment examines how candidates' work approaches and values complement existing team dynamics.

Work style compatibility

Cultural fit interviews evaluate whether a candidate's work approach aligns with organizational culture. Strong candidates articulate their preferences clearly—describing communication styles, team versus solo work preferences, and motivational factors. Interview scoring rubrics examine candidates' responses to questions about feedback preferences, deadline management approaches, and work-life balance expectations.

Team dynamics

Interviewers assess team compatibility through questions about conflict resolution, past collaboration experiences, and typical group roles. Multiple team members in interviews strengthen cultural fit evaluation as they provide diverse perspectives on candidate interactions. Well-constructed interview rubric templates document both observable behaviors and detailed examples supporting scores.
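
A rubric template that records both a score and its supporting example could be sketched as follows. This is a minimal illustration under stated assumptions: the `ScorecardEntry` fields, the 1 to 4 score range, and the `summarize` helper are hypothetical, not a template taken from any company mentioned here.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ScorecardEntry:
    interviewer: str
    dimension: str
    score: int      # assumed range: 1 (Strong No Hire) to 4 (Strong Hire)
    evidence: str   # what the candidate actually said or did

    def __post_init__(self) -> None:
        if not 1 <= self.score <= 4:
            raise ValueError("score must be between 1 and 4")
        if not self.evidence.strip():
            raise ValueError("a score needs a supporting example")


def summarize(entries: list[ScorecardEntry]) -> dict[str, dict[str, float]]:
    """Per dimension: mean score and spread (max minus min) across interviewers."""
    by_dimension: dict[str, list[int]] = {}
    for entry in entries:
        by_dimension.setdefault(entry.dimension, []).append(entry.score)
    return {
        dim: {"mean": mean(scores), "spread": max(scores) - min(scores)}
        for dim, scores in by_dimension.items()
    }


entries = [
    ScorecardEntry("interviewer_a", "Team dynamics", 3, "Described mediating a design dispute"),
    ScorecardEntry("interviewer_b", "Team dynamics", 4, "Credited teammates when discussing a launch"),
]
print(summarize(entries))
```

A large spread on one dimension is a prompt for the panel to compare the written evidence rather than average the disagreement away.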

Adaptability to norms

Candidates demonstrating flexibility toward established norms typically receive higher cultural fit ratings. Adaptability assessment focuses on candidates' willingness to learn and embrace change rather than conformity. Candidates should prepare to discuss instances where they successfully adjusted to different workplace cultures or team dynamics.

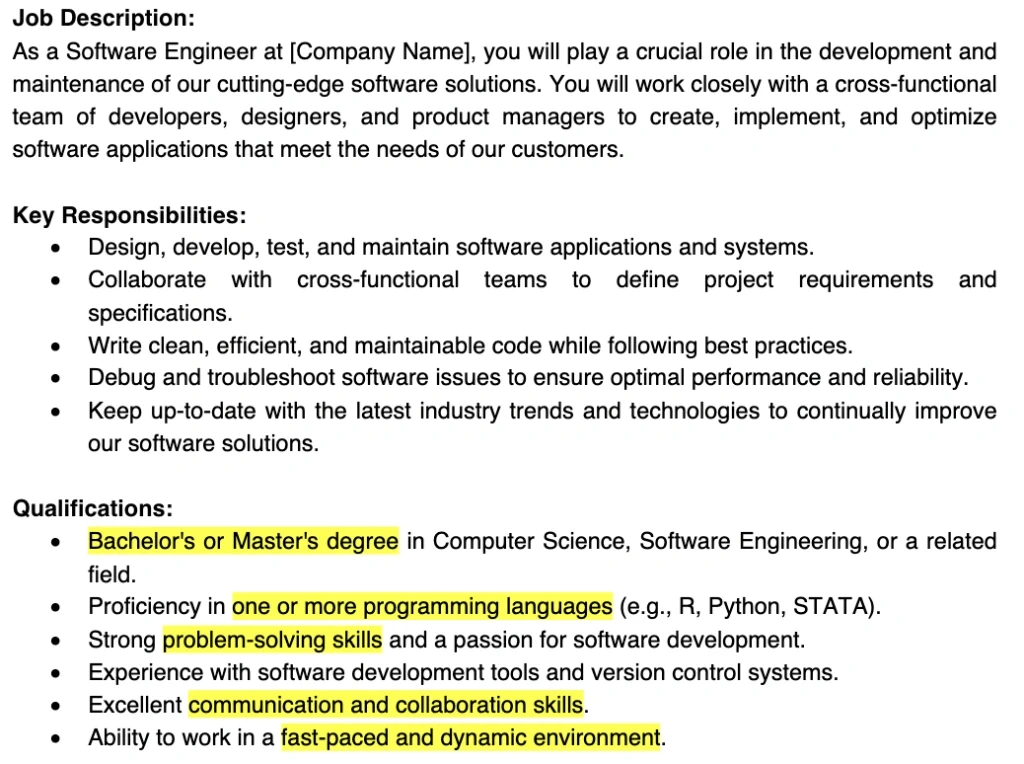

Comparison Table

These rubrics provide a complete evaluation framework across all engineering levels. The table below summarizes expectations, focus areas, and scoring criteria for each dimension.

| Rubric Name | Key Focus Areas | Junior Level Expectations | Senior Level Expectations | Evaluation Scale/Criteria |
|---|---|---|---|---|
| Communication | Clarity, Technical Vocabulary, Explanation Skills | Basic clarification skills, explain approach | Organize thoughts exceptionally well, precise communication, minimal prompting needed | Strong No Hire (unclear/silent) to Strong Hire (thorough, well-organized) |
| Problem Solving | Approach, Optimization, Trade-offs | Develop working solution with adequate organization | Exceptional problem decomposition, systematic solving, evaluate multiple approaches | 4-point scale: Strong No Hire to Strong Hire based on approach and optimization |
| Technical Competency | Code Quality, Language Features | Basic syntax knowledge, few errors | Flawless code, exemplary language knowledge, multiple coding approaches | 4-point scale: 1 (major errors) to 4 (clean code, outstanding understanding) |
| Testing/Debugging | Test Cases, Bug Identification | Basic test case validation | Comprehensive testing strategies, anticipate edge cases | Strong No Hire (no testing) to Strong Hire (identified all edge cases) |
| System Design | Architecture, Scalability | N/A | Deep expertise in multiple domains, novel insights | Breadth, Depth, Proactiveness evaluation |
| Autonomy | Initiative, Decision-making | Use interviewers as resources while maintaining ownership | Control interview pacing, present solutions with minimal prompting | 5-point scale: Strong No to Strong Yes based on independence |
| Code Fluency | Syntax, Libraries, Readability | Basic language constructs with few errors | Code without significant pauses, idiomatic code by default | Based on syntax accuracy and standard library usage |
| Basic CS Knowledge | Data Structures, Algorithms | Familiarity with common data structures | Knowledge of advanced structures, precise complexity analysis | 4-point scale based on complexity understanding |
| Collaboration | Team Interaction, Resource Usage | N/A | Foster inclusive team environments, appropriate credit sharing | Based on observable team behaviors and interaction patterns |
| Curiosity | Learning Drive, Questions | Standard questions about tech stack/hours | Insightful questions, deeper context seeking | Strong No (no interest) to Strong Yes (insatiable learning appetite) |
| Awareness | Self-assessment, Feedback | Recognize basic strengths/weaknesses | Articulate nuanced understanding of capabilities | 4-point scale based on feedback response and growth mindset |
| Values Alignment | Trust, Integrity | N/A | Maintain integrity despite personal costs | Progressive scale based on trust-building behaviors |
| Time Management | Prioritization, Efficiency | Basic task prioritization | Sophisticated approaches to complex work management | Based on deadline adherence and efficiency |
| Leadership | Mentorship, Vision | N/A | Create organization-wide learning systems, influence without authority | Based on mentorship impact and vision implementation |
| Cultural Fit | Work Style, Team Dynamics | N/A | Clear articulation of preferences, adaptability to norms | Based on work style compatibility and team dynamics |
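
For teams that keep rubrics in code or configuration, a few rows of the table could be encoded roughly as below. This is a sketch, not a prescribed schema: the `Rating` enum, the intermediate labels `NO_HIRE` and `HIRE`, and the `dimensions_for` helper are assumptions made for illustration; only the endpoint labels and the expectation text come from the table.

```python
from enum import IntEnum


class Rating(IntEnum):
    STRONG_NO_HIRE = 1
    NO_HIRE = 2       # assumed intermediate label
    HIRE = 3          # assumed intermediate label
    STRONG_HIRE = 4


# A few rows from the comparison table; None stands for "N/A" at that level.
EXPECTATIONS = {
    "Communication": {
        "junior": "Basic clarification skills, explain approach",
        "senior": "Organize thoughts exceptionally well, precise communication, "
                  "minimal prompting needed",
    },
    "Problem Solving": {
        "junior": "Develop working solution with adequate organization",
        "senior": "Exceptional problem decomposition, systematic solving, "
                  "evaluate multiple approaches",
    },
    "System Design": {
        "junior": None,
        "senior": "Deep expertise in multiple domains, novel insights",
    },
    "Leadership": {
        "junior": None,
        "senior": "Create organization-wide learning systems, "
                  "influence without authority",
    },
}


def dimensions_for(level: str) -> list[str]:
    """Return the dimensions that carry an expectation at the given level."""
    return [name for name, levels in EXPECTATIONS.items() if levels[level] is not None]


print(dimensions_for("junior"))
print(dimensions_for("senior"))
```

Keeping "N/A" explicit, rather than omitting the row, makes it clear that a dimension was deliberately skipped for a level and not forgotten.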

Conclusion

Technical interview rubrics serve as essential tools for engineering teams seeking fair, consistent, and effective candidate evaluations. Their systematic approach reduces bias while improving hiring accuracy by 34%. Teams should therefore assess candidates across multiple dimensions rather than focusing solely on coding ability.

The rubrics presented throughout this article show how expectations evolve across seniority levels. Junior engineers typically need to show basic problem-solving capabilities and syntax knowledge, often with guidance. Mid-level candidates must demonstrate greater autonomy, cleaner code implementation, and deeper technical understanding. Senior engineers face expectations for flawless execution, comprehensive testing strategies, and the ability to evaluate multiple approaches with minimal prompting.

Beyond technical skills, these rubrics emphasize equally important soft skills. Communication, collaboration, curiosity, and self-awareness often differentiate exceptional candidates from merely competent ones. Staff-level engineers must additionally showcase leadership capabilities through mentorship, organizational influence, and vision-setting without formal authority.

Structured evaluation frameworks benefit both interviewers and candidates. Interviewers gain consistent assessment criteria that allow fair comparison between candidates while reducing personal bias. Candidates receive clearer expectations for each role level, helping them prepare appropriately and understand advancement requirements.

Companies implementing these comprehensive rubrics report significant benefits. Teams communicate more effectively through standardized evaluation processes, increasing productivity by up to 25%. Organizations using structured frameworks have conducted nearly 100,000 successful technical interviews for companies like Databricks, MongoDB, and Flatiron Health.

The future of technical hiring lies not in arbitrary personal judgments but in observable behaviors measured against clear expectations. Whether you are conducting interviews or preparing for one, these 40 expert-verified rubrics provide a complete framework for creating more predictable, fair, and effective technical assessments across all engineering levels.

Accordingly, hiring managers should implement structured rubrics tailored to their organization's needs while ensuring alignment with company values. Candidates should likewise familiarize themselves with these evaluation dimensions, preparing examples that demonstrate their capabilities across multiple assessment areas. Through this systematic approach, the technical interview process transforms from a subjective judgment into a reliable predictor of on-the-job success.

FAQs

Q1. What are the key components of an effective technical interview rubric? An effective technical interview rubric typically includes assessment of communication skills, problem-solving abilities, technical competency, testing and debugging skills, and system design capabilities. It should also evaluate soft skills like autonomy, curiosity, and cultural fit.

Q2. How do expectations differ for junior vs senior engineers in technical interviews? Junior engineers are usually expected to demonstrate basic problem-solving skills and syntax knowledge, while senior engineers should show exceptional problem decomposition, flawless code implementation, and the ability to evaluate multiple approaches with minimal prompting. Seniors are also expected to exhibit stronger leadership and system design capabilities.

Q3. What role does cultural fit play in technical interviews? Cultural fit assessment examines how a candidate's work approach and values align with the existing team dynamics. It typically evaluates work style compatibility, adaptability to company norms, and potential impact on team dynamics. This aspect is particularly important for senior roles where candidates are expected to influence team culture.

Q4. How important is time management in technical interviews? Time management is a crucial aspect of technical interviews. It reveals how candidates handle limited resources under pressure. Interviewers often assess a candidate's ability to prioritize tasks, adhere to deadlines, and work efficiently. Strong time management skills are particularly important for senior roles where complex project management is expected.

Q5. What is the significance of using structured rubrics in technical interviews? Using structured rubrics in technical interviews leads to more consistent, fair, and effective evaluations. They help reduce bias, improve hiring accuracy by 34%, and allow for better comparison between candidates. Structured rubrics also provide clearer expectations for candidates and help interviewers assess multiple dimensions beyond just coding abilities.

About the Author

Nathan Trousdell is the Founder & CEO of Navero, an AI-powered hiring platform rethinking how companies find talent and how candidates grow their careers. He has led product, engineering, and AI/ML teams across global startups and scale-ups, co-founding Fraudio (a payments fraud detection company that raised $10M) and helping scale Payvision through to its $400M acquisition by ING.

Nathan writes on the future of work, hiring fairness, and how AI must improve, not replace, human decision-making in hiring. He combines nearly two decades of experience in finance, technology, and entrepreneurship with a passion for empowering both teams and talent, ensuring hiring is fairer, faster, and more human.
